GGUF model format - downloading a model

Tip:

Refer to the system requirements section and decide on which method of loading the model you wish to use, if you haven't done so already.

Machine learning is a dynamic field, and there are many standards and quantization formats, both current and already obsolete. For the sake of this guide, we will consider the most versatile of them: GGUF.

GGUF is a file format used by one of the most popular back-end libraries, llama.cpp. Models quantized using this format are capable of being loaded in RAM. Part of the model can also be offloaded to VRAM for extra overall speed. Thanks to this, GGUF remains one of the most popular model formats in use today. Models quantized in this format are usually denoted with a -GGUF suffix on huggingface.co:

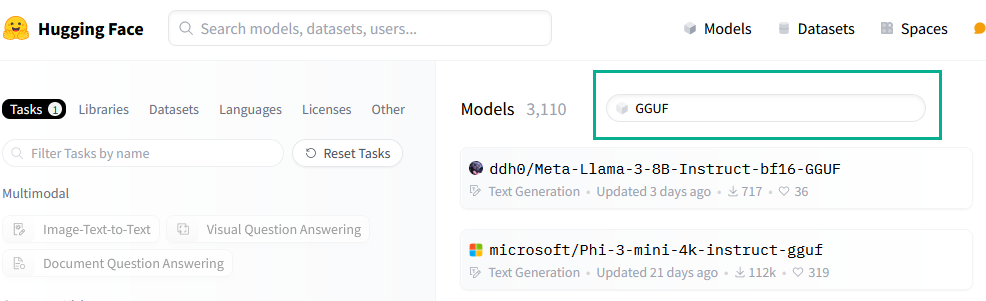

You can also use the filter function to limit the displayed models to this format only:

Browse through the available models and find one that suits your needs. Take into account the amount of memory you will need to load the model.

Tip:

Remember, you can start with a small, 7B model and upgrade to a larger one if it turns out your computer can handle it. Refer to the memory requirements table provided by most GGUF quant uploaders.

Keep in mind that quants below Q5 are generally not recommended. Try to pick a Q5_K_M quant to achieve the best size/quality ratio.

When you've picked a model and the associated quant size, from the model card go to Files and versions, then select the quant you wish to download. After the download is finished, move the .gguf file to your text-generation-webui directory, dropping it in the models folder.