Recommended first models

This section lists a few pre-selected models for those who want to try text-generation-webui with minimal research.

Tip:

If you haven't done so already, refer to the system requirements section and decide which method of loading the model you want to use.

The models below work whether they are loaded into RAM (system memory), into VRAM (GPU memory), or split across both. All of them are meant for general use, much like ChatGPT or similar services.

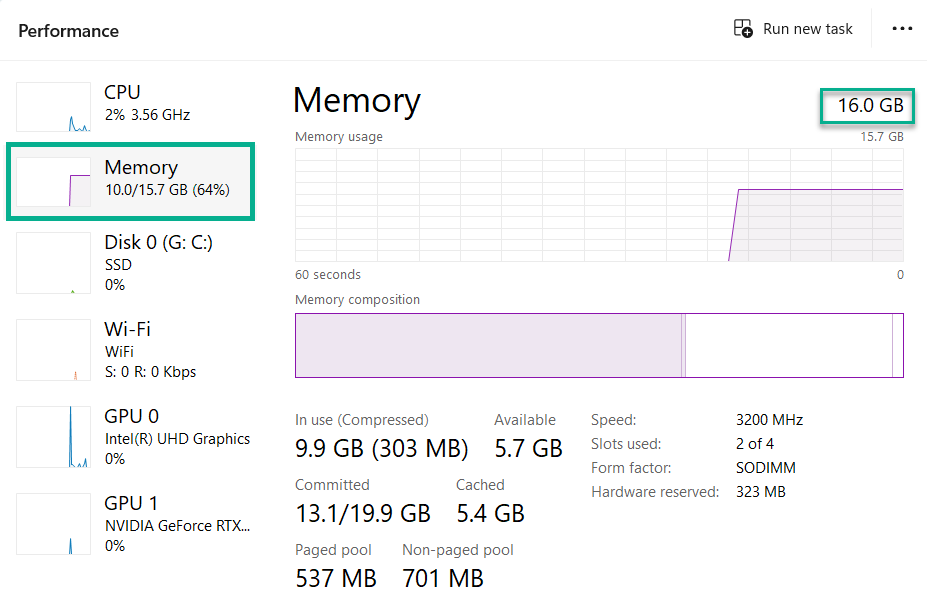

If you're not sure how much RAM you have, follow these steps on Windows:

- Open the Start menu

- Search for Task Manager and launch it

- Go to Performance > Memory

- Note the number in the top right corner. That's the total amount of RAM installed in your computer.

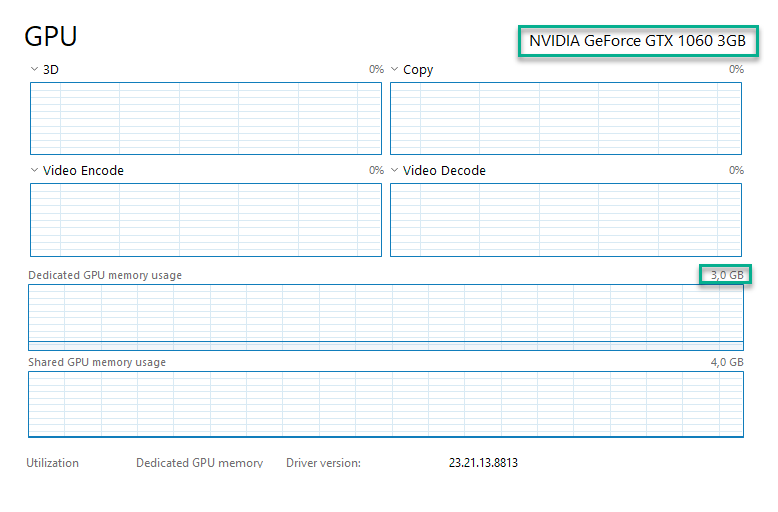

If you're not sure how much VRAM you have, follow these steps on Windows:

- Open the Start menu

- Search for Task Manager and launch it

- Go to Performance, then select your dedicated GPU. It is usually listed as GPU 0 or GPU 1.

- Note the number in the top right corner of the Dedicated GPU memory usage box. That number is the total amount of VRAM on that GPU.
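If you prefer a script to Task Manager, the VRAM check above can be sketched in Python. This is only a rough sketch under stated assumptions: it works only with NVIDIA GPUs, it assumes the `nvidia-smi` tool that ships with the NVIDIA driver is on your PATH, and the function names `parse_vram_mib` and `query_vram_mib` are made up for this example.

```python
import subprocess


def parse_vram_mib(smi_output: str) -> list[int]:
    """Turn nvidia-smi output (one MiB value per line, one line per GPU) into integers."""
    return [int(line.strip()) for line in smi_output.splitlines() if line.strip()]


def query_vram_mib() -> list[int]:
    """Ask nvidia-smi for the total memory of each GPU, in MiB.

    Assumption: an NVIDIA GPU is installed and nvidia-smi is on the PATH.
    Raises FileNotFoundError or CalledProcessError otherwise.
    """
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_vram_mib(result.stdout)
```

Calling `query_vram_mib()` returns one value per GPU; divide by 1024 to get a figure in GB that you can compare against the tiers in this section.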

For computers with at least 8 GB of RAM, VRAM, or RAM and VRAM combined:

You can run a q5_K_M quant of Crataco/Nous-Hermes-2-Mistral-7B-DPO-imatrix-GGUF. The chat format for this model is ChatML.

For computers with at least 16 GB of RAM, VRAM, or RAM and VRAM combined:

You can comfortably run a q5_K_M quant of TheBloke/Nous-Hermes-2-SOLAR-10.7B-GGUF. The chat format for this model is ChatML.

For computers with at least 32 GB of RAM, VRAM, or RAM and VRAM combined:

You can run a q4_K_S or q4_K_M quant of mradermacher/Nous-Hermes-2-Mixtral-8x7B-DPO-i1-GGUF. The chat format for this model is ChatML.
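The three tiers above amount to a simple lookup on total memory. The sketch below shows one way to express it; the function name `recommend_model` and the table layout are invented for this example, and the thresholds are copied from the tiers above, not measured requirements.

```python
# (minimum memory in GB, Hugging Face repository, quant), largest tier first.
# Values are taken from the tiers listed in this section.
RECOMMENDATIONS = [
    (32, "mradermacher/Nous-Hermes-2-Mixtral-8x7B-DPO-i1-GGUF", "q4_K_S or q4_K_M"),
    (16, "TheBloke/Nous-Hermes-2-SOLAR-10.7B-GGUF", "q5_K_M"),
    (8, "Crataco/Nous-Hermes-2-Mistral-7B-DPO-imatrix-GGUF", "q5_K_M"),
]


def recommend_model(total_memory_gb: float):
    """Return (repository, quant) for the largest tier that fits, or None.

    total_memory_gb is whatever memory you plan to load the model into:
    RAM alone, VRAM alone, or both added together.
    """
    for minimum_gb, repo, quant in RECOMMENDATIONS:
        if total_memory_gb >= minimum_gb:
            return repo, quant
    return None  # less than 8 GB: none of the models in this section applies
```

For example, `recommend_model(12)` falls into the 8 GB tier, because 12 GB is not enough for the 16 GB tier.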

After you've picked a model and a quant, open the model card on Hugging Face, go to Files and versions, and select the download arrow next to the quant you want. Once the download is finished, move the .gguf file into the models folder inside your text-generation-webui directory.
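If you would rather script the final step than drag the file by hand, the move into the models folder could look roughly like this. It is a minimal sketch: `install_gguf` is a hypothetical helper, not part of text-generation-webui, and both paths are whatever applies on your own machine.

```python
import shutil
from pathlib import Path


def install_gguf(downloaded_file: Path, webui_dir: Path) -> Path:
    """Move a downloaded .gguf file into <webui_dir>/models and return its new path."""
    if downloaded_file.suffix.lower() != ".gguf":
        raise ValueError(f"expected a .gguf file, got {downloaded_file.name}")
    models_dir = webui_dir / "models"
    # The folder normally exists already; create it only if it is missing.
    models_dir.mkdir(parents=True, exist_ok=True)
    destination = models_dir / downloaded_file.name
    shutil.move(str(downloaded_file), str(destination))
    return destination
```

You would call it with the path to the file in your downloads folder and the path to your text-generation-webui directory. The suffix check is there only to catch the mistake of passing a partially downloaded or unrelated file.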