System requirements

While it is possible to run a local LLM on almost any device, you will need the following for the best experience:

- At least 16GB of RAM.

- A modern processor that supports AVX2 instructions. Intel introduced AVX2 with its Haswell processors in 2013 and AMD with Excavator in 2015, so most processors released from 2015 onward support it.

- Optional, but highly recommended: a dedicated NVIDIA or AMD graphics card, with at least 8GB of VRAM.
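The recommendations above amount to a simple checklist. As an illustration, here is a minimal Python sketch that restates them. The `check_system` function is hypothetical and not part of any tool. It does not detect your hardware; you pass in values you read from Task Manager or from your processor's specifications.

```python
# Recommended minimums, restated from the list above.
MIN_RAM_GB = 16
MIN_VRAM_GB = 8


def check_system(ram_gb: float, has_avx2: bool, vram_gb: float = 0.0) -> list[str]:
    """Return a list of warnings; an empty list means every recommendation is met.

    vram_gb = 0 means no dedicated graphics card.
    """
    warnings = []
    if ram_gb < MIN_RAM_GB:
        warnings.append(f"Only {ram_gb:g}GB of RAM; {MIN_RAM_GB}GB or more is recommended.")
    if not has_avx2:
        warnings.append("CPU lacks AVX2; performance may suffer.")
    if vram_gb == 0:
        warnings.append("No dedicated GPU; expect CPU-only inference.")
    elif vram_gb < MIN_VRAM_GB:
        warnings.append(f"Only {vram_gb:g}GB of VRAM; {MIN_VRAM_GB}GB or more is recommended.")
    return warnings


print(check_system(ram_gb=32, has_avx2=True, vram_gb=12))  # []
print(check_system(ram_gb=8, has_avx2=True))  # two warnings: low RAM, no GPU
```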

The more powerful your computer, the larger and more capable the models you can run. There are three main methods of running local LLMs. Which one you can use depends on how much memory you have, counting both VRAM and system RAM:

GPU inference: The fastest and preferred method is to load the model entirely into VRAM (your graphics card's memory). This requires a dedicated graphics card.

The amount of VRAM you have determines the size of the model you can load. Treat 8GB of VRAM as the minimum and 24GB as the optimal amount.
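A common rule of thumb for estimating a model's memory footprint is to multiply its parameter count (in billions) by the bits per weight and divide by 8. This gives the size of the weights in gigabytes; you then add some headroom for the context. Unquantized FP16 models use 16 bits per weight, and quantized models commonly use around 4 to 8. For example, a 7B model at 4 bits needs about 7 × 4 / 8 = 3.5GB for its weights. The sketch below assumes a 20% overhead on top of that, so the example comes to roughly 4.2GB. The 20% figure and the function names are assumptions for illustration only. Actual usage depends on your software and context length, so treat the results as rough estimates.

```python
def estimate_model_memory_gb(params_billion: float, bits_per_weight: float,
                             overhead: float = 1.2) -> float:
    """Rough memory footprint of a model in GB (decimal, 1e9 bytes).

    params_billion: parameter count in billions (7 for a 7B model)
    bits_per_weight: 16 for FP16, roughly 4 to 8 for quantized models
    overhead: ASSUMED multiplier for context and runtime buffers (20% by default)
    """
    # 1e9 params * bits / 8 bits-per-byte / 1e9 bytes-per-GB = params_billion * bits / 8
    weights_gb = params_billion * bits_per_weight / 8
    return weights_gb * overhead


def fits_in_vram(params_billion: float, bits_per_weight: float,
                 vram_gb: float, overhead: float = 1.2) -> bool:
    """True if the estimated footprint is no larger than the given VRAM."""
    return estimate_model_memory_gb(params_billion, bits_per_weight, overhead) <= vram_gb


for size in (7, 13, 34, 70):
    need = estimate_model_memory_gb(size, 4)
    print(f"{size}B at 4-bit: ~{need:.1f}GB, fits 8GB: {need <= 8}, fits 24GB: {need <= 24}")
```

Under these assumptions, a 4-bit 13B model (about 7.8GB) just fits into 8GB of VRAM. A 4-bit 70B model (about 42GB) exceeds even 24GB.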

Did you know?

You can install multiple graphics cards in your system to increase the total VRAM available for AI tasks, as long as your inference software supports splitting a model across GPUs.

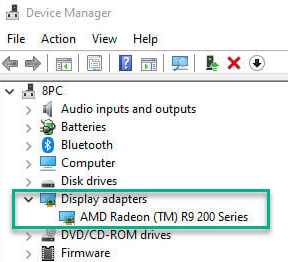

If you're not sure whether you have a dedicated or integrated graphics card, follow these steps:

- Open the Start menu

- Search for Device Manager and launch it

- Expand Display adapters

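- If you only see an Intel entry such as Intel UHD Graphics or Intel Iris Xe Graphics, you do not have a dedicated graphics card. If you see an NVIDIA card or an AMD Radeon RX card, you are good to go! Note that an entry reading "AMD Radeon Graphics" with no model number is usually the integrated graphics built into an AMD processor. If you see both an integrated and a dedicated entry, your computer has both, which is also fine for our purposes.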

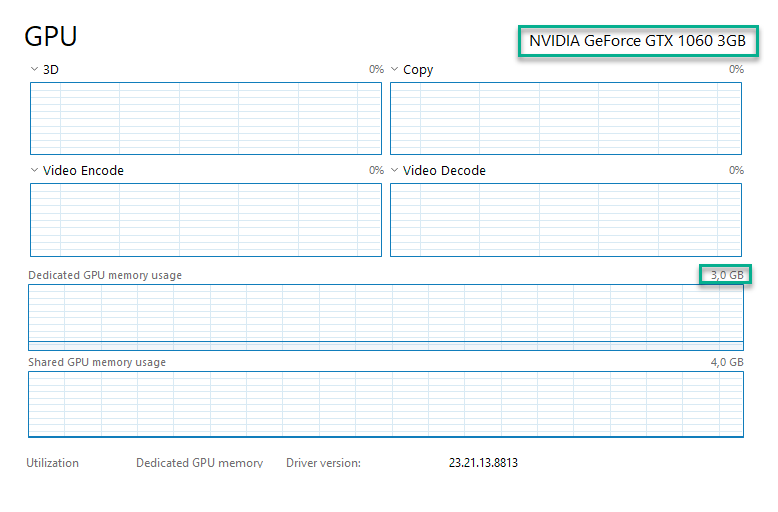

If you're not sure how much VRAM you have available, follow these steps:

- Open the Start menu

- Search for Task Manager and launch it

- Go to Performance, then select your dedicated GPU (listed as GPU 0 or GPU 1)

- Note the number in the top right corner of the Dedicated GPU memory usage graph. That number is the total amount of VRAM on your graphics card.

GPU + CPU inference: This method uses both your VRAM and your system RAM to hold the model. Offloading part of the model to system memory lets you load larger models, but at a significant performance penalty. To estimate how large a model you can run this way, add your VRAM and RAM together. Assume you need at least 16GB of combined memory.
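Many local LLM runtimes handle GPU + CPU inference by splitting the model by layers. As many layers as fit in VRAM go on the GPU, and the rest run from system RAM. The following Python sketch estimates that split. It assumes every layer is the same size and reserves 1GB of VRAM for the context and graphics driver. The `plan_offload` function and these defaults are illustrative assumptions, not values taken from any particular tool. For `ram_gb`, pass the RAM you can actually spare, not the total installed.

```python
def plan_offload(model_gb: float, n_layers: int, vram_gb: float, ram_gb: float,
                 vram_reserve_gb: float = 1.0) -> tuple[int, int]:
    """Return (gpu_layers, cpu_layers) for a model split between VRAM and RAM.

    ASSUMPTIONS: all layers are the same size, and vram_reserve_gb of VRAM is
    kept free for the context and driver. Raises ValueError if the layers left
    over for the CPU do not fit in ram_gb.
    """
    layer_gb = model_gb / n_layers
    usable_vram = max(vram_gb - vram_reserve_gb, 0.0)
    # Put as many whole layers on the GPU as fit, but never more than the model has.
    gpu_layers = min(n_layers, int(usable_vram // layer_gb))
    cpu_layers = n_layers - gpu_layers
    # The remaining layers must fit in system RAM.
    if cpu_layers * layer_gb > ram_gb:
        raise ValueError("Not enough VRAM + RAM to hold this model")
    return gpu_layers, cpu_layers


print(plan_offload(model_gb=20, n_layers=40, vram_gb=8, ram_gb=16))  # (14, 26)
```

In this example, each layer is 0.5GB and 7GB of VRAM is usable. That puts 14 layers on the GPU and leaves 26 layers (13GB) in system RAM.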

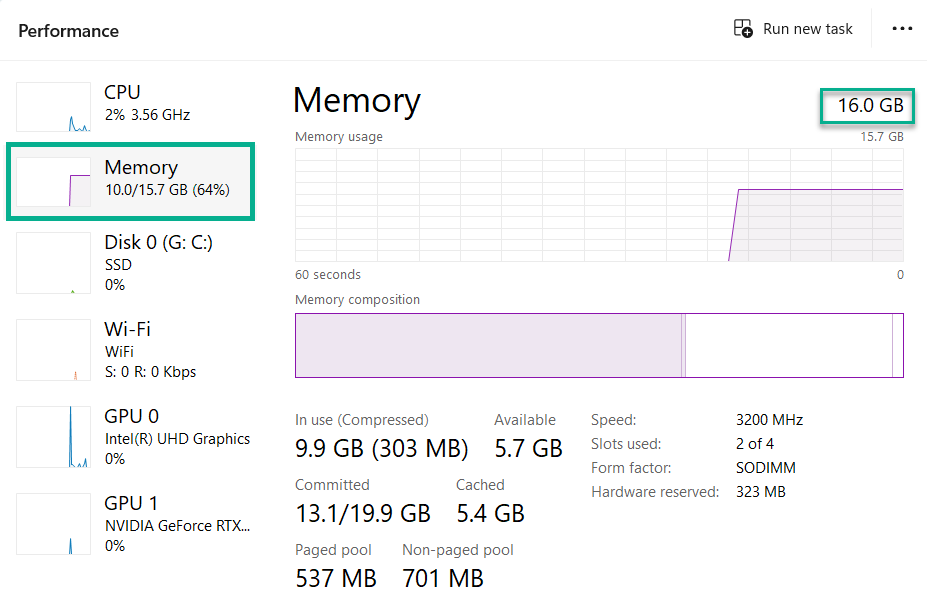

If you're not sure how much RAM you have, follow these steps:

- Open the Start menu

- Search for Task Manager and launch it

- Go to Performance > Memory

- Note the number in the top right corner. That is the total amount of RAM installed in your computer.

CPU-only inference: This method runs the model on your CPU and system RAM. It is by far the slowest method, but also the cheapest in terms of hardware. Assume 16GB of RAM as the minimum.
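Taken together, the choice between the three methods comes down to where the model fits. The hypothetical `choose_inference_method` function below checks the methods in order from fastest to slowest. `model_gb` is the model's approximate memory footprint. `vram_gb` and `ram_gb` are assumed to be the memory you can spare after the operating system and other programs; this is a simplification, not a precise rule.

```python
def choose_inference_method(model_gb: float, vram_gb: float, ram_gb: float) -> str:
    """Pick the fastest of the three methods that the model fits into.

    vram_gb = 0 means no dedicated graphics card.
    """
    if vram_gb > 0 and model_gb <= vram_gb:
        return "GPU inference"  # whole model in VRAM
    if vram_gb > 0 and model_gb <= vram_gb + ram_gb:
        return "GPU + CPU inference"  # part in VRAM, rest offloaded to RAM
    if model_gb <= ram_gb:
        return "CPU-only inference"  # no usable GPU, model fits in RAM
    return "Model too large for this machine"


print(choose_inference_method(model_gb=4.2, vram_gb=8, ram_gb=12))   # GPU inference
print(choose_inference_method(model_gb=20.4, vram_gb=8, ram_gb=24))  # GPU + CPU inference
print(choose_inference_method(model_gb=7.8, vram_gb=0, ram_gb=12))   # CPU-only inference
```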