Model parameters

Unlike online services such as ChatGPT, Gemini or Claude, local LLMs let you adjust their parameters manually. You can make the AI's responses more random or more predictable, change the output length, and more.

Instruction templates

An instruction template defines the format in which your prompts are sent to the AI, matching the format the model was trained on. To check or change it, load a model in text-generation-webui and go to the Parameters > Instruction template tab.

Usually, the model's author includes the required template in the model's metadata, which lets text-generation-webui set the correct template automatically. If this doesn't happen, look up the name of the recommended template on the model's card on huggingface.co.

Setting the template manually is simple, because text-generation-webui ships with the most commonly used templates pre-installed. Select the desired template from the drop-down menu and confirm with Load.
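To make the idea concrete, here is a minimal Python sketch of what a template does: it wraps your prompt in the exact text layout the model expects. The Alpaca-style layout and the `apply_template` helper are illustrative assumptions, not text-generation-webui's internals; once the right template is loaded, the webui does this wrapping for you.

```python
# A minimal sketch of an instruction template: the user's prompt is inserted
# into a fixed text layout before being sent to the model.
# The Alpaca-style layout below is only an illustration; always use the
# template recommended on the model's card.

ALPACA_STYLE_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def apply_template(template: str, instruction: str) -> str:
    """Insert the user's instruction into the template's placeholder."""
    return template.format(instruction=instruction)


if __name__ == "__main__":
    prompt = apply_template(ALPACA_STYLE_TEMPLATE, "Summarize the plot of Hamlet.")
    print(prompt)
```

The template ends right where the model's answer should begin, so the model continues the text with its response. A model fed a different layout may still answer, but often less reliably.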

Generation parameters

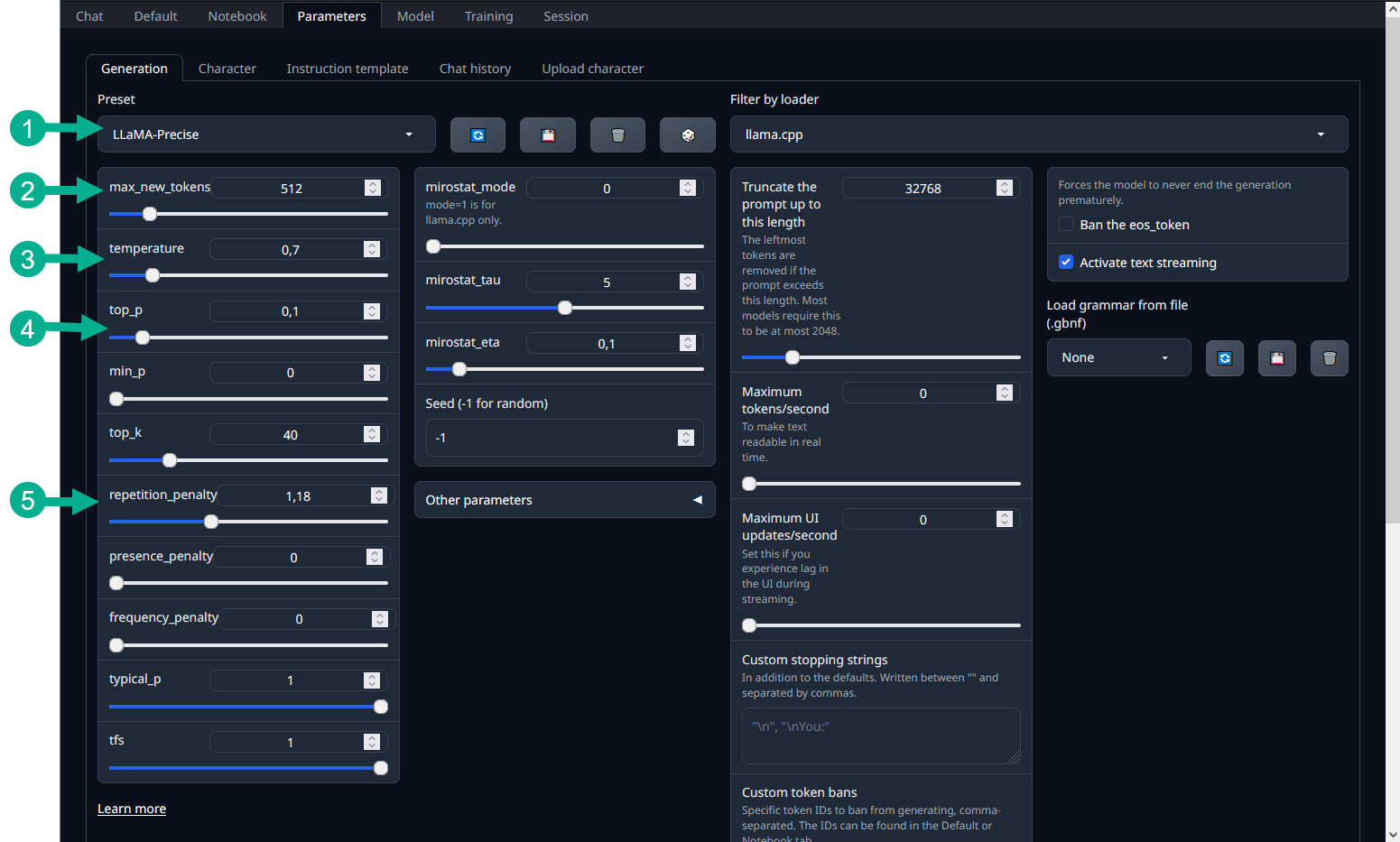

Model generation parameters allow you more freedom to experiment with your local model. After loading a model, go to Parameters > Generation. You will see the following interface:

- Premade presets – for ChatGPT-like generation, LLaMA-Precise is recommended. Select the preset from the drop-down menu to apply its values to the parameters below.

- max_new_tokens – sets the maximum number of tokens the model can generate in a single response.

- temperature – influences the model's "creativity". The higher the value, the more randomness is introduced into token selection. Keep it between 0.7 and 1.2; for general AI assistant chats, the lower end of this range is recommended.

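- top_p – cuts off the least probable next tokens during generation: only the smallest set of most probable tokens whose combined probability reaches top_p is considered. A top_p of 0.1 means that only the tokens making up the top 10% of the probability mass are considered.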

- repetition_penalty – increase this value if your model keeps repeating itself or uses the same words too often. It makes tokens that have already appeared less likely; 1.0 means no penalty. Values between 1.1 and 1.2 work best.
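To show how max_new_tokens, temperature, top_p and repetition_penalty interact, here is a small pure-Python sketch of one common way to pick each next token. It is an illustration under assumptions, not what text-generation-webui runs internally: the webui hands sampling to its loaders (such as llama.cpp or Transformers), whose order of operations and extra options differ. The repetition-penalty formula follows the convention used by Hugging Face transformers, the default values are arbitrary, and all function names, including the `fake_model` stand-in, are hypothetical.

```python
import math
import random


def apply_repetition_penalty(logits, previous_ids, penalty):
    """Make tokens that already appeared less likely.

    Uses the Hugging Face transformers convention: positive logits are
    divided by the penalty and negative logits multiplied by it, once per
    distinct token. A penalty of 1.0 leaves the logits unchanged.
    """
    out = list(logits)
    for tok in set(previous_ids):
        out[tok] = out[tok] / penalty if out[tok] > 0 else out[tok] * penalty
    return out


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the smallest set of most probable tokens whose cumulative
    probability reaches top_p, then renormalize them to sum to 1."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


def sample_next_token(logits, previous_ids, temperature=0.7, top_p=0.9,
                      repetition_penalty=1.15, rng=random):
    """Apply the three settings in turn, then draw one token id."""
    logits = apply_repetition_penalty(logits, previous_ids, repetition_penalty)
    probs = softmax_with_temperature(logits, temperature)
    candidates = top_p_filter(probs, top_p)
    tokens = list(candidates)
    weights = [candidates[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def generate(next_logits, prompt_ids, max_new_tokens=50, **sampling):
    """Toy generation loop that stops after max_new_tokens new tokens.

    next_logits is a hypothetical stand-in for the model: it takes the token
    ids so far and returns one logit per vocabulary entry. Real loaders also
    stop early when the model emits an end-of-sequence token.
    """
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        ids.append(sample_next_token(next_logits(ids), ids, **sampling))
    return ids[len(prompt_ids):]


if __name__ == "__main__":
    # A fake five-token "model" that always prefers token 0.
    def fake_model(ids):
        return [3.0, 1.0, 0.5, 0.2, 0.1]

    rng = random.Random(0)
    print(generate(fake_model, [4], max_new_tokens=5, rng=rng))
```

Calling `generate` with `top_p=0.1` on the fake model always returns token 0, because that single token already covers more than 10% of the probability; raising `temperature` or `top_p` lets the less likely tokens through.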

Feel free to experiment with these options and see how the model's responses change!